Judgment isn't uniquely human

Neither is taste. Why do we keep making this mistake?

In January 2022, Meta’s Chief AI Scientist made an expensive error.

Yann LeCun believed that models like GPT-3, trained only on text, couldn’t actually be intelligent. How could they possibly learn about real-world physics? If a plate sits atop a table, and you push the table, what happens to the plate? Not even GPT-5000 would be able to learn this, he declared. Two months later, GPT-3.5 proved him wrong. Meta eventually spent billions trying to catch up.1

Incorrectly asserting AI’s limits is very common, though not usually so pricey.

The most recent assertion comes from a New York Times Op-Ed, which says that judgment “is a uniquely human skill,” one that “cannot be automated – at least not any time soon.”

I understand the difficulty of anticipating AI progress, but the author is just mistaken: AI can already do what he claims it cannot. And even if AI couldn’t already do this, there’s no intrinsic barrier to learning judgment: It is a skill gleaned from feedback and experience — not magic.

I wouldn’t ordinarily worry about a single Op-Ed, but it reflects a troubling pattern, one that sets us up to be blindsided when AI ‘unexpectedly’ gains abilities we’d ruled out. Smart people keep asserting mistaken limits about AI. Why?

AI already has the judgment the author claims it lacks

Let’s start with the Op-Ed before broadening into ‘AI could never’-ism more generally.

By judgment, the author means “the capacity to arbitrate among competing values and differences of opinion,” especially when “trade-offs are unavoidable and the right answer is not waiting to be computed.” In his telling, this is the domain of humans alone.

As evidence of AI’s limits, the author discusses his work in investment banking: His team fed data to AI in order to propose a price for buying a company. According to the author, AI’s suggested bid was too low; it did not account for the frosty relationship between the CEOs. The target company thus rejected the first offer but later accepted a higher one: “The model could not account for the interpersonal dynamics. Judgment could.”2

I’m not persuaded of the causality here. But the author is also just mistaken: AI can already make judgments like this.

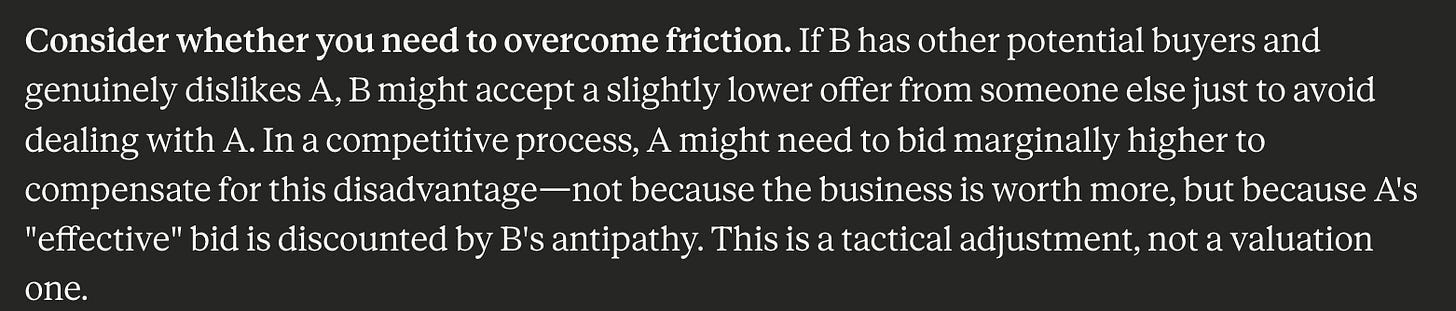

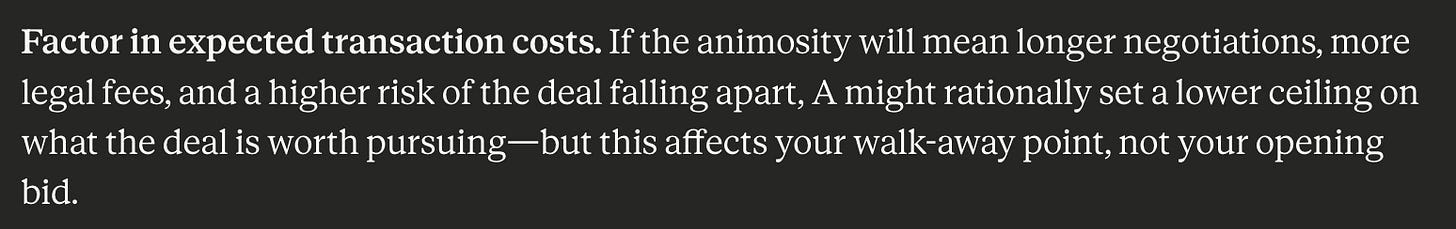

Ask Anthropic’s latest model whether CEO animosity affects acquisitions, and you’ll get an excellent answer: The offer price might need to be raised, especially in a competitive bid. Dislike might entail higher transaction costs (e.g., longer negotiations, higher legal fees), which should reduce the buyer’s maximum willingness-to-pay. Some acquisition formats should be avoided because they entail an ongoing relationship. I could go on.

Financial judgment is one thing, but what about sensitive interpersonal matters? The author speaks admiringly of old-timey British judges, who resolved cases amid conflicting testimony and records, demonstrating what he calls “the skills to make things better where machines cannot.” But AI absolutely has judgment like this too, and the author doesn’t engage with any of the studies that examine it.

Two studies, published in the prestigious journals Science and Nature, found that AI outperformed humans on the types of complex judgments the author admires. In one, AI-powered mediation outdid human mediators in group consensus-finding. In another, participants rated AI’s handling of ethical dilemmas as more thoughtful, trustworthy, and moral than even that of the New York Times’s professional advice columnist.3

These studies tested AI systems from several years ago; today’s models are even more capable. So I consider the Op-Ed’s claims to be on shaky ground, even though AI judgment certainly has its flaws.

But as I’ve said, a single Op-Ed isn’t especially notable. The interesting question is why claims like this continue to be made.

Why do people make incorrect claims about what AI ‘can’t do’?

People criticize AI without using frontier models

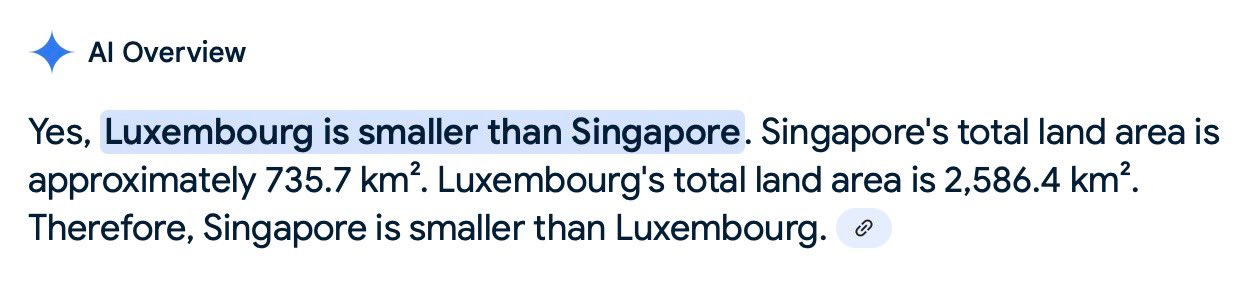

One issue is that out-of-date models are far more commonly used than state-of-the-art ones. When those models falter, people conclude that AI is weaker than they thought, when the weakness is actually in the particular system they used. As I’ve noted before, Google’s instant search summary is free, fast, and rather gaffe-prone.4 GPT-5.2 Pro is much better, but also much costlier and slower, so it’s rarely used.

Critics’ claims are difficult to evaluate because they often omit the specific AI system that was used. For instance, in the acquisition example, the Op-Ed author references “an AI model” used “last year,” so probably not one of today’s frontier models. Likewise, in another example he mentions an “AI-assisted analysis” conducted “not long ago.” It wouldn’t surprise me if these models were far from state-of-the-art even then, let alone compared to today’s models.

Meanwhile, the AI companies keep the best models essentially all to themselves for their own internal work. Almost nobody outside of these companies — myself included — knows where the true frontier is. With that uncertainty, it’s wise to be less confident about AI’s limits.

People use flawed setups, and treat these flaws as intrinsic weaknesses of AI

A fundamental issue with the Op-Ed’s acquisition example is that the AI doesn’t seem to have been given proper context. How could the model know about the frosty relationship between the CEOs without being told? Someone needs to either provide that information, or give AI a means of uncovering it.5

To be clear, the difficulty of getting adequate context is a real limitation for AI. But the issue isn’t a lack of judgment: It’s that humans still have advantages like noticing subtle vibes around the office, or learning gossip from watercooler chats. Sure, you could plug AI into an always-on video bot and let it wheel itself around the office, I guess. But people mostly don’t want that, and the benefits aren’t demonstrably large enough. Humans still have an edge.

Another genuine limitation is AI’s incomplete grasp of tacit knowledge—the know-how gained from experience, which often isn’t written down and so is harder to teach to AI. But lacking this experience is different from fundamentally lacking the capacity for judgment. And AI developers are working to close this experience gap.6

Though these limitations are real advantages for professionals today, I do expect the advantages will fade with time. I would recommend not relying on these to keep one’s job safe from AI indefinitely.

People overromanticize humanity’s abilities

I suspect the largest factor is that people want there to be something uniquely human, a cognitive skill that AI can’t match.

I understand the appeal: It’s comforting to believe that humans are special, immune from AI’s competition. But does the evidence support it? The Op-Ed author speaks highly of human judgment, after all, but I doubt he’d want a judge who is gripped with the pangs of hunger.7 Both humans and AIs can exert judgment, and each has its flaws, at least for today. But it’s conceivable to me that AI will vastly surpass our judgment in the near future, and ‘wishcasting’ that problem away doesn’t help us to prepare.

I see romantic notions expressed perhaps most commonly with art. Ted Chiang’s argument is that AI art can’t be interesting because art involves meaningful choices, and AI’s choices will always be average or derivative. Others claim that art made by humans will have an elusive, human spark to it, which can be felt by the observer. But if people don’t know AI art’s origin, do they actually find it uninteresting or less special?

As I see it, this belief has been thoroughly debunked: In fall 2024, 11,000 people were surveyed about 50 artworks (half AI-generated, half not) without knowing the art’s origins. The two most popular pieces were AI-generated, as were six of the top ten overall. Even the survey’s most adamant AI-art haters had this preference; their favorites were AI, too.

People also aren’t very good at telling whether something is AI-generated. One funny example: The survey featured only one human-painted Impressionist painting, and the respondents believed it was created by AI. All of the others, which were actually AI-generated? They were believed to be painted by humans.8

We like to think we can sense a human’s taste and judgment. Yet the novelist Vauhini Vara discovered that even her close friends — some of them professional writers — couldn’t distinguish her writing from AI-generated prose. Lines she considered hokey, her friends described as “especially your style.” What gives?

Judgment and taste are intellectual tasks, like any other

There is a long tradition of people declaring which tasks AI will struggle to accomplish, only to move the goalposts once AI accomplishes them. ‘Judgment’ is merely the latest in this chain, as the AI researcher Noam Brown pointed out in response to this Op-Ed. With time, these abilities have all proven teachable.9

The Op-Ed author doesn’t seem to properly account for how AI learns skills or how broad its capabilities are. Instead, he’s succumbing to what I call the “one-level-higher” hypothesis: that whatever AI can do, surely there will still be a role for humans one level up the value chain. When “routine analysis becomes automated,” professionals will be distinguished by their ability to “synthesize across domains.” Someone will need to “decide whether an analysis is trustworthy, what implications it carries and whether acting on it would be wise.” And so on.

These beliefs would be convenient, but I don’t see why they’re true. ‘Spotting connections across domains’ and ‘considering the implications of analysis’ are both abilities that humans learn through experience and feedback.10 Without invoking something mystical about the human brain, why wouldn’t AI be capable of learning these too? And once AI clearly demonstrates these abilities, might businesses then home in on AI as the cost-effective option for all these types of work, leaving neither for humans?11

The role of human judgment, specifically

I’ve been critical of the Op-Ed, but it does prompt a question for me, one that’s worth separating from the Op-Ed’s empirical claims: Even if AI can apply judgment, where should we still insist on humans remaining responsible?

Fundamentally we have a choice about the society we want to build. Even if AI can validate whether an analysis is trustworthy, we might want people who feel dread in their stomach about making a bad call, and thus feel spurred to really get the details right. But at what gap in abilities would it be negligent to favor one’s own judgment over that of the automated system?

Or, consider jobs where some people prefer to interact with human workers. That preference is legitimate, but how should we weigh it in contexts like professional driving, where errors weigh heavily on third parties?

I don’t have simple answers here, but I’m confident that doubting AI’s future abilities won’t help us navigate the coming tradeoffs. To shape the future we want, it’s better to clearly acknowledge the abilities that AI may soon have — and then consciously decide where we prefer humans anyway.

Acknowledgements: Thank you to Dan Alessandro, Michael Adler, Michelle Goldberg, and Sam Chase for helpful comments and discussion. The views expressed here are my own and do not imply endorsement by any other party.

If you enjoyed the article, please give it a Like and share it around; it makes a big difference. For any inquiries, you can get in touch with me here.

The video of Yann saying this is here. One possible steelman of Yann’s view is that he was doubting an AI could learn this because no text describes that scenario; maybe he was simply mistaken about that, such text does exist, and that’s how AI learned what happens to an object atop a table. But I’m not persuaded by this explanation, given the wide range of abilities we’ve since seen from AI, which go beyond what’s directly described in its training data.

For more on the costs of Meta’s attempted catch-up, see e.g., “Meta’s New Superintelligence Lab Is Discussing Major A.I. Strategy Changes.”

The full quote is:

Last year a board wanted to know how much it should offer for a business it wanted to buy. We fed a huge amount of data into an A.I. model, which suggested a valuation that seemed low to me. The chief executives of the two companies had a fraught relationship, and we debated whether the deal price would need to be higher. Sure enough, when the company offered the A.I.-recommended price, it was rejected. The higher price was later accepted and the transaction secured. The model could not account for the interpersonal dynamics. Judgment could.

The mediation study is here. The ethical dilemmas study is here. To be clear, I would buy that humans at their worst still outperform AI at its worst, given how erratic some AI outputs are. But clearly there is some meaningful AI judgment to be grappled with, if AI is outperforming these humans on average.

I am making an inference here that the AI was fed only financial data, rather than qualitative information like this; the Op-Ed is not clear on this point.

Could the AI have determined the relationship’s frostiness without being told directly? Perhaps if the AI had access to web-search and if the rivalry was famous, then it may have known anyway. But this is difficult to gauge without knowing what AI tool was used and how. Another option is to equip AI with ways to surface not-yet-vocalized issues, like being able to query executives about any concerns with the deal.

Certainly some AI systems could also flag this as an unknown unknown from the outset: When I ask ChatGPT about qualitative factors generally for an acquisition, it does talk about what’s necessary for “winning the deal,” as well as how the CEOs’ psychology will factor in (e.g., is the seller happy to be riding off into the sunset, or will they miss the control?).

Indeed, the AI companies believe tacit knowledge can be taught: People can now get paid to think aloud while working, to screen-record their computer, to teach the AI the nuance of their hobbies and interests. It will take time to convert this teaching to reliable know-how, but I do think the AI companies will get there. As one example of attempting to teach tacit knowledge to AI, OpenAI has contracted with a number of ex-investment bankers to improve their models’ finance-related abilities.

There is a famous statistical finding about judges being stricter on parole decisions made right before mealtime, but it’s unclear whether this is a statistical artifact, or a true causal relationship. Still, I wouldn’t want to take this risk if I could avoid it.

Funnily enough, one person the survey conductor spoke with is extremely good at identifying AI-generated art, and said their key is that the details in AI-generated art don’t make much logical sense. In other words, if you look closely, the scene feels incoherent. This is actually very close to the argument made by Chiang, that art is made up of choices that should all fit together.

And yet, the lack of coherence doesn’t hugely impact the emotional experience for many observers. In the survey, the average participant was correct 60% of the time in identifying whether art was AI-generated, compared to the 50% we would expect from chance. This percentage was slightly higher for people who profess to hate AI-generated art (64%), are professional artists (66%), or both (68%), but even that is far from a perfect eye.

In 1989, Garry Kasparov was asked whether a computer would one day be world champion. Two grandmasters had already lost to computers. Kasparov said:

Ridiculous! A machine will always remain a machine, that is to say a tool to help the player work and prepare. Never shall I be beaten by a machine! Never will a program be invented which surpasses human intelligence. And when I say intelligence, I also mean intuition and imagination. Can you see a machine writing a novel or poetry? Better still, can you imagine a machine conducting this interview instead of you? With me replying to its questions?

Within a decade, Kasparov lost his match to Deep Blue, and I expect there will never again be a human who is better than the strongest chessbots.

I haven’t found a source for the claim that AI would ‘never’ beat humans at Go, but in 1997, Piet Hut put it this way:

It may be a hundred years before a computer beats humans at Go -- maybe even longer. If a reasonably intelligent person learned to play Go, in a few months he could beat all existing computer programs. You don't have to be a Kasparov.

Less than twenty years later, AlphaGo defeated one of the world’s top players, Lee Sedol, in a five-game match. After the first game in the match, Ke Jie, the world’s #1 player, declared that AlphaGo might beat Lee Sedol but wouldn’t beat Ke himself. AlphaGo went on to beat Ke the following year.

In 2015, Chris Moorman — whom Wikipedia describes as “the all-time leader in career online poker tournament earnings” — is recounted as having said:

Moorman guaranteed to me that while a computer can beat the best in the world in a poker cash game with limits, this would never happen in no limit tournaments. There are too many variables for a computer to process, and the most important of them are human factors.

I haven’t found a source claiming that IMO Gold specifically was impossible because reasoning is uniquely human, but there is no shortage of articles making the claim that AI can’t reason. About a month and a half before Google DeepMind and OpenAI achieved Gold-medal IMO scores, it was not uncommon to see articles like “A knockout blow for LLMs?” which called reasoning “so cooked.”

I think AI’s ability to solve these IMO problems means either that it can reason, or that ‘reasoning’ is being used very differently than it is in normal conversation. And yes, AI does not reason with 100% accuracy.

When I worked in management consulting a lifetime ago, the accepted wisdom was that people learn these skills best through apprenticeship — watching more experienced consultants tackle problems. The shift to remote work during COVID seemed to bear this out: Friends in consulting tell me it was harder for early-career analysts to develop these skills solo, now that they spent less time with senior teammates.

It’s tempting to envision a “centaur economy,” where humans and AI team up to accomplish what neither could alone. Still, human contributions might eventually become net-negative. In chess, there was only a brief window when human-AI pairs were the best players in the world. At any given ability-level, we should also expect AI to become progressively cheaper, absent major production shocks, like a war. The question, of course, is what other types of work remain for humans in this world; if there are other options, it is less concerning that these particular tasks have become automated.
