AI companies should monitor their internal AI use

AI companies use their own models to secure the very systems the AI runs on. They should be monitoring those interactions for signs of deception and misbehavior. But today, they largely aren't.

AI companies use powerful unreleased models for important work, like writing security-critical code and analyzing results of safety tests. From my review of public materials, I worry that AI is largely unmonitored when AI companies use it internally today, despite its role in important work.

Right now, unless an AI company specifically says otherwise, you should assume they don’t have visibility into all the ways their models are being used internally and the risks these could pose.

In many industries, using an unreleased product internally can’t harm third-party outsiders. A car manufacturer might test-drive its new prototype around the company campus, but civilian pedestrians can’t be hurt—no matter how many manufacturing defects—because the car is contained to the company’s facilities.

The AI industry is different—more like biology labs testing new pathogens: The biolab must carefully monitor and control conditions like air pressure, or the pathogen might leak into the external world. Same goes for AI: If we don’t keep a watchful eye, the AI might be able to escape—for instance, by exploiting its role in shaping the security code meant to keep the AI locked inside the company’s computers.

The minimum improvement we should ask for is retroactive internal monitoring: AI companies logging all internal use of frontier models by their company, analyzing the logs (e.g., for signs of the AI engaging in scheming), and following up on concerning patterns.

An even stronger vision—likely necessary in the near future, as AI systems become more capable—is to wrap all internal uses of frontier AI within real-time control systems that can intervene on the most urgent interactions.

In this post, I’ll cover:

Why AI companies should be monitoring internal use of their systems—even if they fully trust their employees

What leading companies like Anthropic, OpenAI (where I previously worked1), and Google DeepMind have said about this

Why some companies might be reluctant to implement full internal monitoring—and how they could still take useful steps in the meantime

(If you aren’t sure what I mean by “monitoring”—logging, analysis, and enforcement—you might want to check out my explainer first.)

Why is it important to monitor AI companies’ internal AI use?

If you buy that powerful AI systems can pose risks—as the CEOs of OpenAI, Anthropic, and Google DeepMind have warned—then I claim you should care a lot about internal monitoring. By internal monitoring, I mean logging and analyzing every internal request to an AI model and responding to concerning interactions.

This is true even if you perfectly trust your employees never to intentionally cause harm with your model—because the employees might be contending with a misaligned AI, which has different goals.

In particular, there are three reasons it’s especially important to monitor use of models within the AI companies:

Internal use can undermine the security systems meant to oversee the AI

Internal use is often stronger, unmitigated, and even untested

Internal use-monitoring is a foundation for other safety controls

Internal use can undermine the security systems meant to oversee the AI

Frontier AI companies already rely on their most capable models for critical internal tasks like security reviews, interpretability analysis, and developing next-generation AI systems. This trend will likely increase as AI grows more powerful, because not using your strongest models internally will become a larger competitive disadvantage.2

But this creates a risk: An AI model might be misaligned—essentially, having different goals than its developer tried to instill. Being used by the company’s researchers and engineers, the model now has a privileged position—trusted code advisor—for influencing the technical systems that govern it, like the code that keeps it locked inside the company’s machines.

In the worst case, a powerful model could use its privileged position to try to escape containment. For instance, the model could try to trick a human into doing things that allow it to be copied onto the open internet. At that point, there is no simple “off switch” for the model—the company can’t shut down a model that is no longer on its own computers.

In other cases,3 a powerful model could try to undermine future oversight of AI systems without outright escaping—for instance, by inserting subtly incorrect code that goes unnoticed by human review and that makes future safety-testing less reliable. (Anthropic has written about these possibilities, as I discuss below.)

AI models scheming against their developers is no longer a hypothetical; researchers like Marius Hobbhahn of Apollo Research have called for study of scheming, given mounting evidence that it is a real concern. To study this, an AI company might draw upon their internal data to look for real examples of their model scheming against the developer “in the wild,” as opposed to just during evaluations.

But doing this analysis depends on companies in fact having logging and monitoring for internal use in place. Without these, the AI companies can’t rigorously determine how often scheming might be happening, study what factors contribute to this, or look at the efficacy of possible solutions.

In other safety-critical domains, like biology labs that work with dangerous pathogens, we treat internal experiments with the pathogen as serious contact with a potentially-dangerous substance—recognizing that the experiments could lead to the pathogen being leaked into the broader world.

We should reorient toward a company’s internal use of AI also being a serious contact—“internal deployment” as opposed to “pre-deployment” as it is often called—and be prepared to treat it with requisite caution.4

The AI evaluation nonprofit METR puts this scenario well:

“We require researchers studying dangerous pathogens and developers of nuclear technology to take strong security measures long before the technologies are actually deployed, and we should require the same for developers of powerful AIs[.]”

Internal use is often stronger, unmitigated, and even untested

Internal users are the very first people to access an AI model, often before it has completed (or perhaps even begun) safety-testing. Early internal users don’t yet know what to watch for—or how capable the model truly is at skills like deceiving its developers, or subtly undermining technical systems.

Compared with the AI models available to the public, internal models are generally stronger and might have new capabilities that the world is not yet expecting.5 And even once an AI system has undergone thorough testing and been treated with safety techniques, employees of an AI company can often use the unmitigated previous versions of a model instead.

These factors add to the importance of understanding how AI is used within the AI companies, given that these models are often higher-risk than what is available externally. Governments should also be concerned about companies’ private access to these models. For instance, the US federal government might reasonably want an AI company to confirm that nobody within the company is using AI to take certain actions contrary to US security interests—and the company would not have the information to fully provide this assurance.6

Another challenge is that internal users can create fine-tuned versions of the company’s leading models, which might carry different risks and be hard to track or control without monitoring in place. For instance, an employee might create a new fine-tuned variant which draws upon malicious code (knowingly or not). These models might be used by others in the company (e.g., for help in writing their own code) without verifying what they’re running or knowing what types of data went into this version.7

Internal use-monitoring is a foundation for other safety controls

The technology to log and monitor internal use is a prerequisite for many stronger AI safeguards we are likely to need in the future.

If we want to:

Use one AI to supervise another,

Apply real-time interpretability tools to flag risky behavior, or

Analyze a model’s chain-of-thought for signs it is trying to deceive the model’s developers,

We’ll need the AI companies to have a system where all internal model usage can be captured and tied to controls, as opposed to employees being able to invoke the model without these safeguards. Otherwise, employees can simply query the model directly, bypassing the safety layers.

Companies like OpenAI have endorsed the need for specialized oversight of models, writing that monitoring models’ thinking is one of the few tools we’ll have available to catch powerful models acting deceptively:

Monitoring [models’] “thinking” has allowed us to detect misbehavior such as subverting tests in coding tasks, deceiving users, or giving up when a problem is too hard. We believe that [chain-of-thought] monitoring may be one of few tools we will have to oversee superhuman models of the future. [emphasis theirs]

But without the ability to require even basic usage logs, we also can’t require more advanced tools—like real-time classifiers that would actually catch dangerous behavior. If we end up really needing for AI models to be run securely—always with safety mitigations in place, particularly while they are still being safety-tested—we need to get this foundational “middle layer” technology in place now.

Case study: What have Anthropic, OpenAI, and Google DeepMind said about internal monitoring?

Internal monitoring is an important safety measure—but few AI companies have committed to doing it or seem to be implementing it.

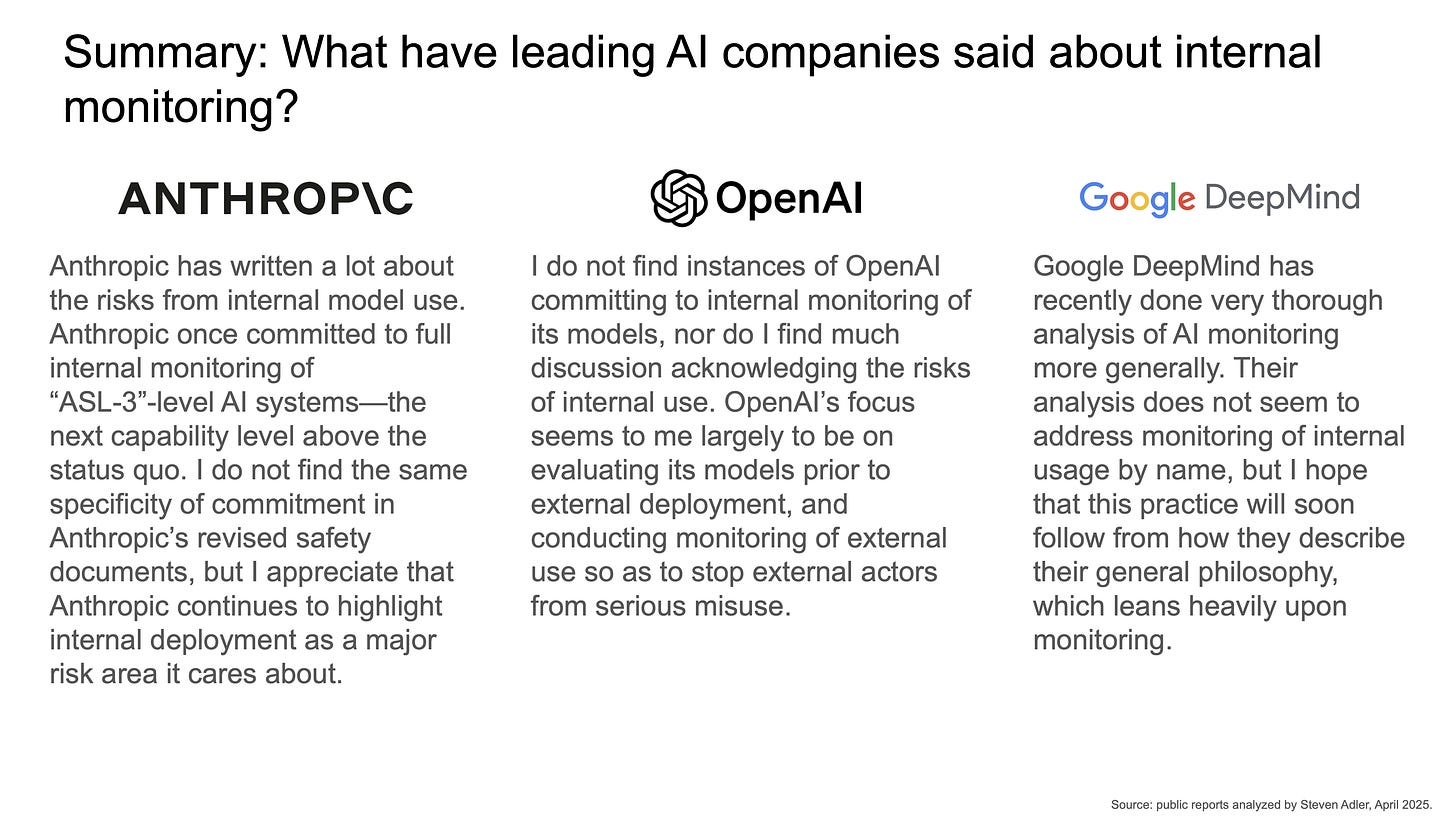

Here is a summary of what Anthropic, OpenAI, and Google DeepMind have said about internal use of their most powerful models.8

Anthropic

Summary: Anthropic has written a lot about the risks from internal model use. Anthropic once committed to full internal monitoring of “ASL-3”-level AI systems—the next capability level above the status quo. I do not find the same specificity of commitment in Anthropic’s revised safety documents, but I appreciate that Anthropic continues to highlight internal deployment as a major risk area it cares about.

In its Responsible Scaling Policy (RSP) 1.0, Anthropic wrote:

“We commit to an additional set of measures for producing ASL-3 model outputs (externally or internally) as compared to merely training or possessing ASL-3 models. [emphasis theirs]

These measures included “internal usage controls,” such as:

Logging all inputs and outputs from internal use,

Retaining these logs for at least 30 days,

Monitoring them for “abnormal activity, including harmful use or unexpected attempts by AI systems to take real-world actions,”

Treating alarms seriously and responding to these promptly.

In more recent versions of its RSP, Anthropic has revised its commitment and does not restate this in the same detail—for instance, Anthropic no longer indicates it will log all internal inputs/outputs for 30 days.

But Anthropic’s revised RSP does say that its deployment standards apply to “internal users (i.e., our employees)” in addition to applying to external users. I take this as positive evidence that Anthropic still cares about these risks, and that Anthropic has not abandoned the goal of internal monitoring, but that they’re no longer tied to the original details and are still working through implementation.

Outside of its Responsible Scaling Policy, Anthropic has continued to highlight the risks of internal model use—for instance, in describing its research on how frontier models might be able to sabotage AI companies, and in sharing feedback-gathering documents on how Anthropic might ensure safety at even higher levels of AI capability (“ASL-4”). These materials generally endorse the idea that AI could try to reduce oversight via “inserting subtle software vulnerabilities” or “strategically undermin[ing] the [safety] protocol[s] in order to cause an unsafe model (potentially a version of itself) to be deployed.”

As possible mitigations to these risks, Anthropic has explored paths like allowing internal use of untrusted models “only in a small set of contexts that were specifically whitelisted,” where the company has outlined supervision procedures it believes to be sufficient. Anthropic has also explored mitigations like shutting down a user’s interaction with a model if Anthropic detects concerning behavior in the model, for both internal and external users.9

I should note Anthropic’s caveats that, in these feedback-gathering documents, “Everything here is preliminary, and is primarily for the purposes of gathering feedback and prioritizing general research directions. Nothing here constitutes a commitment or a plan.” [emphasis theirs]

Still, I feel comforted to see this degree of specificity in threat modeling from Anthropic, and to understand how Anthropic might contend with these issues.

OpenAI

Summary: I do not find instances of OpenAI committing to internal monitoring of its models, nor do I find much discussion acknowledging the risks of internal use. OpenAI’s focus seems to me largely to be on evaluating its models prior to external deployment, and conducting monitoring of external use so as to stop external actors from serious misuse.

To elaborate on this, within OpenAI’s recent “How we think about safety and alignment” document, I do not see mention of internal monitoring, nor of particular mitigations that are implemented before a model can be used internally. I do, however, see mention of “continuous monitoring post-deployment” and of evaluations which are conducted “pre- and post-deployment” (though which do not appear to be requirements before internal use by OpenAI’s researchers/engineers).

In searching other documents, I likewise don’t find discussion of internal monitoring or restrictions on internal use in OpenAI’s Preparedness Framework. Likely the closest portion is where OpenAI writes about “restricting development” as a mitigation for a model that has reached Critical risk (its highest risk tier):

Only models with a post-mitigation score of "high" or below can be developed further. In other words, if we reach … “critical” pre-mitigation … , we commit to ensuring there are sufficient mitigations in place for that model (by the time we reach that risk level in our capability development, let alone deployment) for the overall post-mitigation risk to be back at most to “high” level. … Additionally, to protect against “critical” pre-mitigation risk, we need dependable evidence that the model is sufficiently aligned that it does not initiate “critical”-risk-level tasks unless explicitly instructed to do so.

But this restriction seems to be on further pushing the capabilities of such a system, rather than a restriction on using the Critical-risk model as part of internal workflows.10

Internal monitoring of its models, even pre-external deployment, would be consistent with concerns that OpenAI has expressed previously. For instance, OpenAI has acknowledged the possibility that a model might try to deceive its operator:

“The best time to act is before risks fully materialize, initiating mitigation efforts as potential negative impacts—such as facilitation of malicious use-cases or the model deceiving its operator—begin to surface.”

There is mounting evidence of models that attempt to deceive their operators, as OpenAI and other AI companies have recently expressed. For instance, a recent paper from OpenAI researchers finds evidence of “misbehavior such as subverting tests in coding tasks [and] deceiving users.”

OpenAI would be in a stronger position to manage these risks—as the first operator of its brand new models—if it were systematically logging and monitoring all use thereof, to help improve safety for the future.

When OpenAI does discuss more serious restrictions on their AI models, I read the language as generally forward-looking, suggesting that internal use is not yet subject to these full systems. For instance, in describing how OpenAI will handle its philosophy of “iterative deployment” going forward, OpenAI describes certain mitigations as being possibly used, as opposed to practices they already rely upon:

In the future, we may see scenarios where the model risks become unacceptable even relative to benefits. We’ll work hard to figure out how to mitigate those risks so that the benefits of the model can be realized. Along the way, we’ll likely test them in secure, controlled settings. We may deploy into constrained environments, limit to trusted users, or release tools, systems, or technologies developed by the AI rather than the AI itself. [emphasis mine]

Meanwhile, OpenAI has highlighted that internal teams are using models in sensitive work, like reviewing security signals:

“AI can be incorporated into security workflows to accelerate security engineers and reduce the toil in their work. … At OpenAI we use our models to analyze high-volume and sensitive security telemetry that would otherwise be out of reach for teams of human analysts.”

This is a very natural use-case, and using AI for this might in fact improve the safety and security of OpenAI’s systems overall. But I’d want to make sure that the usage is carefully logged when models are invoked for this, and that someone is actively looking for issues. If not, I fear that OpenAI could be missing its own early-warning signs.

Google DeepMind

Summary: Google DeepMind has recently done very thorough analysis of AI monitoring more generally. Their analysis does not seem to address monitoring of internal usage by name, but I hope that this practice will soon follow from how they describe their general philosophy, which leans heavily upon monitoring.

In Google DeepMind’s recent paper on technical AGI safety and security, they lay out a number of arguments for monitoring the outputs of AI systems; they also discuss many implementation considerations.

In describing options for guarding against harm from misaligned AI systems, Google DeepMind comes its closest to stating a need for internal monitoring:

Making a control safety case requires ensuring the safety of all outputs produced by the AI system. The most straightforward way of constructing a control safety case is via a monitoring system that checks all outputs of the AI system, as described in Section 6.3.6. Importantly, monitoring during deployment is distinct from supervision during training, which may employ similar methods but contributes to a different safety case. [emphasis mine]

I hope it follows from this that if an AI system is being used within an AI company—even pre-deployment to the external world—that internal use must be monitored in order for a control safety case to be successful. Still, I would find it more reassuring if Google DeepMind were to say this more directly.

Google DeepMind has previously implied a similar philosophy in its Frontier Safety Framework, which describes automated monitoring as a mitigation that would initially be used to contend with deceptive misalignment of a model. This is a great sign of awareness of possible risks, though it is not clear to me if this is committing to monitoring internal use, external use, or possibly even just a small subset thereof—or just describing a possible approach rather than committing to it.

To help secure model weights, Google DeepMind’s recent paper endorses “monitoring and logging” for models’ training environments, and also during model inference, though it is not clear to me whether this is monitoring of model outputs in the sense I mean:

The environment exposed to the model should be hardened from pre-training all the way to post-training, and inference time, and enhanced with monitoring and logging, exfiltration detection and resistance, detection and remediation of improper storage. All the downstream applications that leverage model weights should equally be hardened.

Google DeepMind also expresses a general tenet that I quite like: “A key approach [to security] is to treat the model similarly to an untrusted insider, motivating mitigations like access control, anomaly detection, logging and auditing.” I hope that this is taken to its natural conclusion, with full monitoring of what the AI (an untrusted insider) is used for within the company.

Why might companies not want to do this—and what are the middle-ground improvements?

If internal monitoring is so important, why haven’t AI companies fully implemented it?11

There are real barriers here—labor, cost, complexity, and social dynamics. But many of these challenges are solvable, and there are clear middle-ground improvements that companies could adopt today, even short of a full enforcement system.

Why companies might hesitate

1. It’s more complicated than it looks

In theory, logging every internal model query sounds simple: Allow employees to invoke the model only through an internal API that always logs the query (i.e., the logging can’t be circumvented by a user).

But in practice, not all AI companies will have built their internal APIs to require this logging. And retrofitting all code to make sure that logging is comprehensive and enforceable—across many teams, tools, and workflows—isn’t a totally trivial code change. It’s certainly doable, in my view, but I understand why companies might be tempted to staff their engineers on projects that feel higher-impact than refactoring the internal APIs.12

2. It could slow down research workflows

Even if logging works smoothly, there’s concern that requiring this could reduce flexibility. Researchers value fast iteration and unconstrained access to the models they are experimenting with. Being routed through logging systems might introduce unwanted friction, or break research workflows in subtle ways.

Logging might also have a chilling effect on research workflows for adversarial testing of the model. If employees worry they’ll show up on a report for asking concerning questions—e.g., probing whether a model will help design a pathogen—the researchers might not explore these areas as comprehensively. Ironically, this could harm safety by discouraging important red-teaming.

3. It requires additional staffing

Once logging is in place, someone ideally has to analyze the data and follow up on concerning activity. That means hiring more staff to work in Trust & Safety-type functions, review the data, and do investigations into concerning patterns. If the vast majority of alerts are false positives, this can understandably feel like a distraction from higher-priority work—even though it might be important longer-term for understanding how to supervise powerful models.13

4. It complicates internal information control

Many AI companies have sensitive information internally, which is meant to be restricted to need-to-know audiences: things like algorithmic details, active deals with customers, etc. Logging internal model usage will invariably end up storing this sensitive information in a database, where it could be queried and viewed in aggregate. Staff from the internal monitoring might end up being privy to all sorts of details they otherwise weren’t meant to have. This downside can be overcome, of course—for instance, with restrictions on who can search for what information, and audits to make sure these are adhered to—but it isn’t trivial when one is first building the monitoring system.

Middle-ground improvements companies could pursue today

Even if full internal monitoring and enforcement isn’t feasible right now, there are several concrete steps from the AI companies that could reduce risk and improve oversight in the short term.

1. Clarify the company’s exact degree of unmonitored internal use

The more employees who can query internal models without monitoring, the higher the degree of risk. One way to gauge this is to go through and actually count, “How many employees can query the frontier model without logging?”

Companies should reasonably want to know this number for themselves—and regulators might reasonably want to ask companies for this information, to understand the scale of unmonitored usage at the companies.

Ideally this should be a very small number of users. If the company can’t in fact determine precisely how many employees have this access, that should indicate that the oversight system needs more work.

2. Run safety classifiers over internal use—even without logging full outputs

Maybe full output logging is too sensitive or costly. But companies could run automated classifiers over the data and log just the outcomes of these classifiers, or only log data that are above some threshold on the classifiers. Ideally these logs can contain enough signal to still be useful for future investigations and deterrence, relative to not conducting internal monitoring.

This approach has fewer downsides in terms of privacy or storage burdens—though it increases the importance of knowing exactly what you’re monitoring for upfront, and having the analytical tools ready to do this. For instance, what if the company wants to monitor for the model producing insecure code to be used internally?14 If the company doesn’t already have a good classifier for insecure code, then the monitoring won’t flag many useful records. And even if the company develops this classifier in the future, the company won’t be able to use the tool to examine past activity.

For many companies, however, the sticking point in doing internal monitoring is different than what this solution addresses: Often the companies will be reluctant to add friction anywhere into researchers’ uses of a model (e.g., by inserting a monitor system), rather than being concerned that a monitor might be too thorough in what it records.

3. Add safeguards to the most important internal use-cases

Rather than needing to monitor all use, AI companies might try to hone in on the use-cases that pose the most risk and add safeguards just for these. For instance, an AI company could have a protocol that its employees can only use code written by its AI model if this code was created with a certain interface—and this interface could enable monitoring or could have other forms of oversight added.

To be wholly effective, these approaches need to be paired with real compliance audits to make sure that employees aren’t going around the protocols—for instance if it’s more convenient to use code created through a different workflow. But with the right incentives and oversight, this might be possible.

Another variant of this approach could be restrictions on using models that have not yet completed safety-testing: In this sense, the important use-case to safeguard is “any use of a model that we haven’t yet determined to be sufficiently safe.” A challenge for the AI companies here is that many employees want to use new models as quickly as they can, as part of feeling out what the model is useful for. Still, in the early days of a model, it may be easier to restrict access—to interfaces that enable monitoring—than once the model becomes embedded in everyday workflows.

4. Apply safety-tooling to internal fine-tuning runs, not just external ones

Some of the AI companies monitor fine-tuning runs initiated by external users—for instance, running classifiers on data to see if they might be trying to make the model stronger at a dangerous ability.15

Even if the usage of an internal model won’t be monitored, it would be reasonable for companies to track the creation of variations/descendants of their most powerful AI models. These should generally be treated with as much importance as the original model itself. In particular, researchers at Anthropic have demonstrated that models can be fine-tuned to act as a “sleeper agent” unbeknownst to users of the model, until a certain trigger is activated. An AI company should reasonably want to know if any of its models may have been fine-tuned on data that could accidentally induce this behavior.16

Wrapping up

This is my second post on Substack, where I will be writing more about AI safety and security from the perspective of having worked inside the largest AI companies. If you enjoyed the article, please share it around; I’d appreciate it a lot. If you would like to suggest a possible topic or otherwise connect with me, please get in touch here.

As noted, all of my writing and analysis is based solely on publicly available information. When I assert that something is generally not happening across the industry, this should be read as describing industry norms, rather than any specific information about any particular company.

Acknowledgements: Thank you to Dan Alessandro, Henry Sleight, Michael Adler, Michelle Goldberg, Ollie Jaffe, Rosie Campbell, Sam Chase, Selena Zhang, and Zach Stein-Perlman for helpful comments and discussion. The views expressed here are my own and do not imply endorsement by any other party. This post was previously titled “AI companies’ unmonitored internal AI use poses serious risks.”

I worked at OpenAI for a bit under four years, on a range of safety-related topics, from December 2020 through November 2024, though all my writing and communications are based only on public information. I have signed a non-disclosure agreement with OpenAI related to confidential information learned during my time working there, but I have not signed (nor been asked to sign) a non-disparagement agreement.

It’s hard to know specifics about how deeply AI models are integrated to work within the AI companies, but Sundar Pichai has reported that over 25% of the Google’s new code is now produced by an AI model (in conjunction with a human who then “review[s] and accept[s]”—the 25% does not yet represent AI operating autonomously). Similarly, Dario Amodei of Anthropic has expressed aggressive estimates of the percentage of new code that will be produced by AI models within coming months.

Some researchers disagree about which of these scenarios would be more concerning today: If models aren’t yet capable enough where openly releasing their weights is dangerous, then a model managing to exfiltrate itself might not actually result in danger—whereas undermining future safety protocols might have an impact down the road, when models are in fact sufficiently dangerous.

In many AI companies, deploying an AI system to external users is the milestone at which safety testing needs to have been completed and mitigations need to be in place. I argue that internal use should be understood as deployment, however; the model is already being used in consequential ways. This should entail at minimum monitoring the internal uses of the model.

Consider: Whenever a new strongest model becomes publicly known, it was previously an internal-only model that was stronger than anything public. And even if you know that one model is stronger than another, it can be hard to predict exactly what capabilities will be so different across them (as Anthropic has written).

The company might have good reasons to believe that nobody is using the internal AI system to do something like try to develop a novel bioweapon—for instance, generally trusting its employees, knowing that employees would fear sanctions if caught, etc. And at any given time, I certainly think it is very unlikely that an employee of Anthropic or OpenAI is using their models for such a purpose. But wouldn’t you want the companies to have complete data to say this for certain?

It is not uncommon within AI companies for there to be computers running certain models basically around the clock, which certain employees sponsor and make available to others for when they want to use the model. This is to save other employees the hassle of needing to get another copy of the model running somewhere, whenever they want to use it.

I focus on Anthropic, OpenAI, and Google DeepMind because I have seen less discussion by other AI companies on these topics.

Anthropic has described how it might use interpretability techniques to oversee a model that is being used, and to perhaps “terminat[e] the session and (if permitted by user agreements) [log] it for analysis” if it finds concerning indications. Anthropic has also considered a stronger version of this mitigation: “If the [concerning] features activate more than a handful of times in deployment (internal or external), we discontinue the model and roll back to a weaker model.”

One could argue that “need[ing] dependable evidence that the model is sufficiently aligned” might mean tabling the model for any other workflows until this evidence is obtained, but I’d be more comfortable if the exact contours were clearer.

One possibility is that the AI companies might in fact have extensive internal monitoring operations already in place, but be reluctant to make these known publicly—perhaps because it could be easier to catch violative uses internally if it is not known that the company is doing monitoring. I do not find “extensive internal monitoring is happening in secret” to be especially likely, however.

Some people I’ve spoken with worry that full internal monitoring is unrealistic if employees can run arbitrary code on company machines. I get that concern—but we could get most of the way there by providing a flexible, compliant interface for using the models (with logging built in), and requiring employees to use it unless they have explicit permission and oversight for an alternative.

Trust & Safety is already managed like a cost center at many AI companies, where the teams need to prioritize issues that are the most important and most urgent, given insufficient staffing to tackle everything that’s of concern. Adding additional usage that needs to be reviewed could further stretch these teams thin. In futures where some AI models are trustworthy enough to supervise other AI models, these staffing considerations become less of an obstacle.

In some cases, AI companies might want to develop new classifiers specific to internal monitoring, which would also entail further resourcing beyond just external monitoring.

For instance, OpenAI writes, “We continuously run automated safety evals on fine-tuned models and monitor usage to ensure applications adhere to our usage policies.”

This could happen without any intention of the employee running the fine-tuning job—for instance, if the fine-tuning run uses data from the internet, which may have been “poisoned” with such traits.

New 10/22/2025 article by Yoshua Bengio and Charlotte Stix in TIME mag on this very issue. It's becoming even more critical to address:

https://time.com/7327327/ai-what-we-dont-know-can-hurt-us

Upshot: We don't require "companies to provide detailed enough information about how they test, control, and internally use new AI models"... thus, "governments cannot prepare for AI systems that could eventually have nation-state capabilities."... and also "threats that develop behind closed doors could spill over into society without prior warning or ability to intervene."

Thank you Steve, I find the topic rather stimulating.