"Contain and verify" as the endgame of US-China AI competition

The competition isn't a race; it's something much harder. How do we make it go well?

Some competitions have a clear win condition: In a race, be the first to cross a finish line.

The US-China AI competition isn’t like this. It’s not enough to be the first to get a powerful AI system.

So, what is necessary for a good outcome from the US-China AI competition?

I thought about this all the time as a researcher on OpenAI’s AGI Readiness team: If the US races to develop powerful AI before China - and even succeeds at doing so safely - what happens next? The endgame is still pretty complicated, even if we’ve “won” the race by getting to AGI1 first.

I suggest two reframes on the US-China AI race:

US-China AI competition is a containment game, not a race.

The competition only ends when China has verifiably yielded on AGI development.

By “containment,” I mean that a good outcome for the US might require stopping China from ever reaching a certain level of AI capability. It isn’t enough for the US to get there first. For instance, it’s an issue if China builds AI that can disrupt US nuclear command and control even a small percentage of the time. This is true even if the US has a system that can more reliably disrupt theirs. There are some types of AI the US wants China never to develop - and likewise, that China wants the US never to develop: the interest in containment is mutual.

By “verifiably yielding,” I mean that the US must be confident that China is not continuing to try to build powerful AI. Otherwise, China might eventually surpass US systems or incur other risks, like losing control over their AI system in the rush to catch up. Unfortunately, methods for “verifiable non-development” - confirming that another party isn’t building AGI - are very understudied. We need to invest heavily in developing these methods and creating treaties that can enforce them: Otherwise, even if we “win” the race to certain powerful abilities, we won’t have good ways to confirm that China has given up on pursuing AGI. (These methods can also be useful for slowing or avoiding the race ahead of time, if countries can verify that the other is not developing AGI.)

Given how high the stakes are perceived to be, getting China to yield might require the US to take a truly dominant lead. Such a dominant lead is far from assured, even if the US believes it could ultimately outrace China.

Both nations would benefit from lowering the stakes of the competition - like “hardening” the world so it’s less vulnerable to the risks of powerful AI, and cooperating on international safety standards.

Military power is a major reason for the US-China race

The central reason for US-China competition on AI is that both believe that AI could be extremely important, economically and militarily.

Of the many specific rationales for racing that have been discussed, the military considerations seem strongest.2 Senior US officials, both Democrats and Republicans, have argued it’s critical to beat China in the AI race, lest advanced AI transform the strength of China’s military. AI could “change the character of warfare for generations to come,” and “the speed of change in this area is breathtaking.”3

When we’re talking about military implications, what AI capabilities do we mean?

Developing new superweapons - As Ted Cruz has put it, “If [there are] gonna be killer robots, I’d rather they be American killer robots than Chinese.”4

Launching cyberattacks - AI might be usable for sabotaging sensitive military systems, like nuclear command and control.5

Improving intelligence-gathering - AI can make forces more effective by helping intelligence analysts collect and sift through way bigger volumes of information.

Creating other novel military technology - AI might be useful to researchers in developing new military technology, e.g. better stealth for aircraft.

Many world leaders have expressed concerns about these future possible military impacts of AI.6 But as of yet, there is no specific international law governing AI’s military applications, unlike the Biological Weapons Convention and Chemical Weapons Convention (both of which the US and China have joined).

In absence of such treaties - and perhaps even if a treaty existed - states might feel no choice but to race ahead to AGI because they fear other states won’t yield either, what I call “regretful racing.”7 States might act under this pressure even if they believe that AGI development could carry existential risks, as many leaders in AI do.8 If everyone else is racing, what choice is there?

US-China AI competition should be viewed in terms of containment, not a race

But there’s a problem with the AI race framework. It doesn’t actually help us avoid the risks we’re concerned about, or to see what’s needed for a successful endgame.

In a race, you win by being the first to cross a line.

But as I’ll explain, you haven’t defused the risks by getting to some level of AI before China. You also need to actually stop China from ever building this level of AI.

The US is reasonably worried about an AGI-empowered China, but racing faster won’t stop this from happening.

We need to view this as a containment game. To illustrate the difference, imagine a simple game like Connect Four, with a modified and neverending goal: Get four-in-a-row, but also make sure your opponent never does.

This can be much harder. If you race to be the first to get four-in-a-row, you might find you have no adequate ways of stopping your opponent from getting there too. And in the context of AI, failing to contain China’s pursuit of AGI could have severe consequences.

Three reasons to focus on containment rather than racing

The US-China AI competition isn’t won just by being first to a powerful AI system.

Leads on AI capabilities aren’t guaranteed forever; China might catch up or leapfrog us, unless we credibly make them stop development. Consider that the US has been ahead of China on AI progress at basically every point to date, but we are still worried about China beating us to future capability levels. Therefore, even if an AI capability lead is enough to confer important advantages, continuing to hold the lead is not assured.9 Continuing to hold a lead is perhaps especially difficult because China can catch up through 1) model theft or other espionage, and 2) the relative ease of copying progress rather than leading, as rumored with DeepSeek.10

China can obtain a sufficiently powerful AI system to be troubling, even if the US’s is stronger. As mentioned previously, it’s a serious problem if China has AI systems that can destabilize our nuclear command and control systems, even if we have systems that are better at destabilizing theirs. Some powerful AI abilities are more about crossing an absolute ability threshold, rather than the relative abilities of systems. This is true of both offensive and defensive uses of AI: In either case, China having a sufficiently powerful AI can still cause problems for our plans. For instance, China’s AI system might become strong enough to defend against novel AI-powered cyberattacks that we rely on, even if the US got there first.

China pursuing AGI - even after the US has developed it - incurs possible catastrophic risks for the world, via losing control of an incredibly powerful AI system. Many US AI leaders have expressed both 1) a belief in the existential risks posed by AGI development (see image below), and 2) a need to race China nonetheless. Maybe the US is willing to incur these risks because it feels no other choice, given a fear of China - “regretful racing.” But once the US has gotten there ahead of China, it should recognize with clearer eyes that there is serious risk entailed by others developing these systems. The risks from continued AGI development might be especially large if we correctly anticipate that China feels desperate due to being behind: China might be more inclined in that case to cut corners and to develop AGI without important guardrails.11

Containing Chinese AI might depend on a dominant, decisive lead

One approach to containment is to offer economic benefits in return for yielding

One method for achieving containment is to have a dominant, decisive lead. You can use this lead to extract a resignation, at terms you and your counterparty find favorable. For instance, a leading state might offer economic upside to the weaker party (“inducements”), in return for not having to worry about the risks of losing a continued head-on competition.

Anthropic CEO Dario Amodei has suggested12 this strategy, for instance, in which the US seeks to,

“use AI to achieve robust military superiority (the stick) while at the same time offering to distribute the benefits of powerful AI (the carrot) to a wider … group of countries … . The coalition would aim to gain the support of more and more of the world, isolating our worst adversaries and eventually putting them in a position where they are better off taking the same bargain as the rest of the world: give up competing with democracies in order to receive all the benefits and not fight a superior foe.” [emphasis mine]

I think of this like negotiating a prize-split as the final two players at a poker tournament. Poker isn’t a race to be the first to a certain number of chips; it’s about making your opponent yield, either by taking all their chips if necessary, or by finding mutually agreeable terms at which you can each agree to split the prize pool.13

If one country has a clear and dominant lead on AI development, maybe they can in fact induce the other to yield. But I expect this to be very difficult.

These inducements might require an extremely dominant lead

Even if the US has “won” the race to some AI capability level, we should expect it to be very difficult to convert this to enduring peace and an end of the competition. “Winning” the AI race would likely require not just beating China to some capability, but doing so with a dominant lead. The more important that the US and China believe the race to be, the more dominant a position must be to get the other to cave, as conceding defeat has higher stakes.

What lead would be decisive enough that China sees no better choice than to yield? Consider that China might believe AGI to be critical to its military strength for decades to come. Today China has considerable leverage in any negotiation (it is a nuclear state, with the ability to retaliate against even the most extreme military actions).14 For the US to get China to yield on a technology it considers so critical, the US would seem to need an overwhelming lead and the related power - certainly not a “photo finish.”

If the competition is close, there might not be a findable win-win compromise. Perhaps each state has secret information about the powerful tactics and strategies they’d pull out in a real moment of desperation. Perhaps there is an individual political cost to yielding, and so a leader won’t accept an otherwise reasonable deal.

More generally, negotiating a compromise might be off the table once the race has heated up. And if negotiation isn’t a credible route, states should expect the other to compete more and more aggressively: The losing state’s back is up against the wall, and if the stakes are high enough (this is why they’re racing, after all) the worst that can happen is that they lose.

Policy implications of a containment approach to Chinese AGI

I recommend a number of policy priorities based on the “contain and verify” reframe:

It’s important to build verifiable non-development treaties as soon as possible.

We should be focused on decelerating China’s pursuit of AGI, potentially even if it requires decelerating our own AGI development.

We should be investing much more heavily in “hardening” technologies that reduce vulnerability to powerful AI, perhaps even ones that help adversaries be less vulnerable.

Verifiable non-development treaties

Suppose the US has beaten China to some capability threshold, and now wants to make sure China stops its own development.

What would this treaty propose, and how can adherence - that China has stopped developing AGI - be verified/enforced?

The goal state for all international AI treaties is to figure out a treaty protocol that does not depend on trust - and in fact, must even survive mistrust.

You might believe that the other party would actively cheat and deceive you if they could, but the protocol is strong enough that you know they can’t. No double-crosses are possible.

“Compute governance” is one particularly important avenue to consider: Can countries verify what compute others have access to, and what they are able to do with said compute in building AI systems?15 Ultimately, I don’t have specific answers on how to do this, but I think it’s way under-invested as a research area. Otherwise, even if the US does beat China in racing to some capability level, the endgame is far from complete; China might still come back in the competition.

Treaties likely won’t be immediately straightforward. Given this, I’d prefer we get started on these questions right away, while the stakes are hopefully lower - before each state has developed even stronger AI systems that increase the risks from conflict.

A route to cooperation ahead of time?

Suppose you buy that the US - having “won” the race - will want China to sign a treaty that requires China not to pursue AGI. (Or perhaps will want China to sign that it’ll pursue AGI only in tandem with the US, as part of an international collaboration.)

Notice that this has many properties in common with how we might cooperate with China today to mutually avoid a risky race. Instead of trying to race to a dominant enough lead where the US manages to actively contain China, the US and China might be able to agree to mutual containment upfront.

Imagine the future scenarios:

A difficult one: Perhaps it isn’t possible to verify that China has yielded on building AGI. In this case, we also can't be confident that we've really won the competition because China could be continuing to do things to leapfrog us, or undermine our advantage. If we can’t verify that China has yielded (or otherwise can’t succeed at building AGI), then we haven’t actually won. The competition goes on.

A more promising one: Perhaps it is possible to verify that China has yielded on building AGI. In that case, we can begin pursuing a treaty ahead of the race, using this verification method. There might be ways to mutually halt or govern the pursuit of AGI16 in a way that benefits our mutual interests. Maybe the US and China can't agree on whether it's in their interests. But if they can and it's possible to verify, this seems fruitful. The competition can then be averted ahead of time.17

Unfortunately, if we don’t pursue such a treaty until the race has further heated up, we’ll need to contend with another difficult dynamic: Countries might be more reluctant to sign if this is viewed as having lost the competition, compared to a decision to mutually de-escalate the stakes upfront.

Decelerating China’s progress

Slowing down China with tactics like export controls and sanctions - not just accelerating oneself toward the “finish line” - is particularly important in the containment frame. If our goal is to contain Chinese development of AGI, it's important we recognize that accelerating our own progress might even be counterproductive, relative to finding ways to cooperate.

Accelerating our own progress might cause China to feel threatened. Given the stakes the US has expressed for the AI race, China has good reason to fear the US getting to a very strong level of AI abilities. This could be much worse for China than the risky-but-relatively-stable nuclear deterrence dynamics of today. Admittedly, it might be too late to convince China that neither of us needs to aggressively pursue AGI - but some factors may still make a difference. In particular, the higher the stakes and the faster we go, the higher the risk of conflict in Taiwan (over control of GPUs) seems to be, as China feels like their back is against a wall.18

Accelerating our own progress allows China to draft off our progress. In particular, US AI development efforts are spurring tons of capital into chip development, new energy sources, algorithmic improvements, and so on, which make it easier for China to ultimately create its own powerful AI, absent other constraints. It is difficult to specifically accelerate US progress without also accelerating China’s progress.

As some analysts have noted, I think many US actions today appear likely to be aiding Chinese AI development efforts, rather than hindering them. We should reconsider these approaches.

Investing more heavily in “hardening” the world against AGI

The US should be investing more heavily in technologies to “harden” the world against risks of powerful AI (for instance, defensive tools to patch vulnerabilities in important pieces of software, which AI might otherwise exploit).

I see three main rationales for these investments:

Even if the US gets to some powerful AI level before China, China may still get there in the end, and we want to have fewer vulnerabilities.

Stronger “hardening” technologies might lower the temperature of the race, particularly if other states can also use them. There might be less impetus to race if the consequences of losing the race are less bad.

Sharing defensive applications of AI might also credibly signal more trust and cooperation, by reducing our power over other states if we were to develop AGI. In other words, they might be a good confidence-building measure between parties who otherwise mistrust each other.19

Wrapping up

The containment approach to the US-China AI competition demands a different approach than racing: investing in mutually beneficial and verifiable non-development treaties; focusing on decelerating other states’ progress; and “hardening” the world against the risks of powerful AI.

Moreover, we should be clear with ourselves about the endgame of this competition. If containment is the goal, our measures of success should reflect this: going beyond improving our capabilities, and making sure we can credibly stop others from developing powerful AI.

Continuing to pursue aggressive AGI development might be against both the US’s and China’s interests. The stakes are going to keep getting higher, and we need to look for ways to turn down the temperature.

But if we’re going to proceed with all-out AI competition, despite the chance of conflict, it’s important that the US be on the best footing possible with its allies. We can pursue improved alliances regardless of what’s happening in AI development: looking for ways to reinforce strong trading relationships, among other tactics.

Acknowledgements: Thank you to Alan Rozenshtein, Alex Mallen, Dan Alessandro, Henry Sleight, Justis Mills, Michael Adler, Nicole Ross, Sam Chase, and Severin Field for helpful comments and discussion. The views expressed here are my own and do not imply endorsement by any other party. All of my writing and analysis is based solely on publicly available information.


I use “AGI” and “powerful AI” as synonyms in this piece. It’s true that AGI (artificial general intelligence) isn’t very well-defined: For instance, is AGI about comparing the abilities of AI systems to typical people, expert people, or something in between? For what percentage of tasks does an AI system need to achieve parity (or superiority) to count as AGI? We don’t know exactly where the crossover happens to some very powerful system. For instance, beyond AGI is even the concept of superintelligence (or ASI - artificial superintelligence). When I use these terms, you might want to substitute in “AI that is capable enough to be really consequential,” even if we don’t know exactly what that threshold is.

It is also noteworthy that there are many types of AI that nations care about beyond the “generative AI” of systems like GPT-4. The competition between the US and China is not just about generative systems - or even just about their recent successors in “agents” that can autonomously take actions in the world. Still, these frontier AI systems are becoming capable at a wider range of tasks that might have required specialized AI systems previously (like interpreting audio and visual files). Accordingly, I don’t distinguish much between different types of AI in this piece.

People have argued a number of rationales for racing China on AI:

Military power. AI might increase the strength of a country’s military, which gives it more bargaining power when negotiating conflict, and better odds of victory in an actual conflict.

Military defense. AI might help a state to defend itself against others that have tapped into advanced offensive capabilities.

Economic prosperity. AI might usher in a technological revolution akin to a new internet or even more so. This could create a lot of wealth for countries whose companies capitalize on the opportunity.

Economic soft power. The US wants its allies to have options to “Buy American” on AI without having to settle for an inferior technology. Otherwise, if they use Chinese AI (because it is superior to US AI), then this might strengthen allies’ relationships with and dependence on China.

Cultural prominence. If we are the builders of the AI systems used around the world, others will use AI systems that draw upon our values and worldview. (This is similar to the soft power considerations.)

Resisting authoritarianism. Some have argued (correctly, in my opinion) that strong AI might help authoritarians to retain power in their country, by amping up their surveillance capabilities, making it more difficult for dissidents to coordinate, and reducing the authoritarian’s reliance on human labor to carry out their bidding.

Besides the military factors discussed in the body of the article, I believe the strongest rationale for competing with China is economic soft power. This has parallels to recent telecom revolutions, in which China increased other countries’ economic dependence by building 5G telecom networks around the world (e.g., through its Digital Silk Road initiative).

Even if one accepts the rationale of economic soft power, however, the endgame of the competition still looks very different from “racing to be the first to a certain level of AI capability.” Instead, the endgame is about locking in enduring relationships with other countries and having them make commitments of some sort to build atop the US’s AI technology stack. (I might explore these dynamics more deeply in a future post.)

Among other discussion of these topics, former Secretary of Defense Mark Esper has said:

Advances in AI have the potential to change the character of warfare for generations to come. Whichever nation harnesses AI first will have a decisive advantage on the battlefield for many, many years. We have to get there first. Future wars will be fought not just on the land and in the sea, as they have for thousands of years, or in the air, as they have for the past century, but also in outer space and cyberspace, in unprecedented ways. AI has the potential to transform warfare in all of these domains.

Former National Security Advisor Jake Sullivan has said:

Imagine how AI will impact areas where we’re already seeing paradigm shifts, from nuclear physics to rocketry to stealth, or how it could impact areas of competition that may not have yet matured, that we actually can’t even imagine, just as the early Cold Warriors could not really have imagined today’s cyber operations.

Put simply, a specific AI application that we’re trying to solve for today in the intelligence or military or commercial domains could look fundamentally different six weeks from now, let alone six months from now, or a year from now, or six years from now. The speed of change in this area is breathtaking.

Elon Musk tweeted back in 2017, for instance, that he believes AI competition between nations is the most likely eventual cause of World War 3. This came in response to Vladimir Putin asserting that whichever nation leads on AI “will become the ruler of the world.” Assertions like this can sometimes reflect posturing. But I think there’s substantial truth in the actual and perceived importance of AI for a nation’s power.

Recent analysis has put more meat to these arguments: The “Superintelligence Strategy” report from Dan Hendrycks, Eric Schmidt, and Alexandr Wang, for instance, argues that states will have powerful incentives to sabotage others’ AI projects, given the immense power that a state could gain through a monopoly on AGI.

By “regretful racing,” I mean the idea that we would prefer not to race if we could credibly coordinate. But in the absence of this credible coordination, our best action, regretfully, is to engage in the race. For instance, even if both states would prefer that neither of them have powerful AI, they can’t coordinate on this. So it ends up being in their interests to pursue powerful AI to defend against the other potentially being aggressive.

This is a classic coordination failure, to use the term from game theory. In “Verifiable non-development treaties,” I describe what sufficient coordination would look like to potentially mutually halt this race.
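To make the coordination failure concrete, here is a minimal sketch in Python. It assumes a stag-hunt-style payoff table, which is my illustrative choice and not a claim about real-world payoffs: the numbers, the action names "hold" and "race," and the helper functions are all hypothetical. The sketch shows that mutual restraint and mutual racing can both be stable outcomes, while racing is the "safe" choice when you can't trust the other side.

```python
from itertools import product

ACTIONS = ("hold", "race")

# Hypothetical ordinal payoffs (higher is better), as (state_0, state_1).
# Assumption: both states most prefer that neither builds powerful AI,
# and holding back while the other races is the worst outcome.
PAYOFFS = {
    ("hold", "hold"): (4, 4),
    ("hold", "race"): (0, 3),
    ("race", "hold"): (3, 0),
    ("race", "race"): (1, 1),
}

def payoff(player, own_action, other_action):
    """Payoff to `player` (0 or 1) for a given pair of actions."""
    profile = (own_action, other_action) if player == 0 else (other_action, own_action)
    return PAYOFFS[profile][player]

def best_response(player, other_action):
    """Action that maximizes `player`'s payoff, given the other's action."""
    return max(ACTIONS, key=lambda a: payoff(player, a, other_action))

def nash_equilibria():
    """Profiles where each state's action is a best response to the other's."""
    return [
        (a, b)
        for a, b in product(ACTIONS, ACTIONS)
        if best_response(0, b) == a and best_response(1, a) == b
    ]

def maximin_action(player):
    """Action with the best worst-case payoff: the choice under mistrust."""
    return max(ACTIONS, key=lambda a: min(payoff(player, a, o) for o in ACTIONS))

if __name__ == "__main__":
    print("Stable outcomes:", nash_equilibria())
    print("Safe choice under mistrust:", maximin_action(0), maximin_action(1))
```

Under these assumed payoffs, each state prefers to hold if it is confident the other will hold, but races if it fears the other might race. That is the gap a verifiable treaty is meant to close: verification is what lets each side be confident about the other's action.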

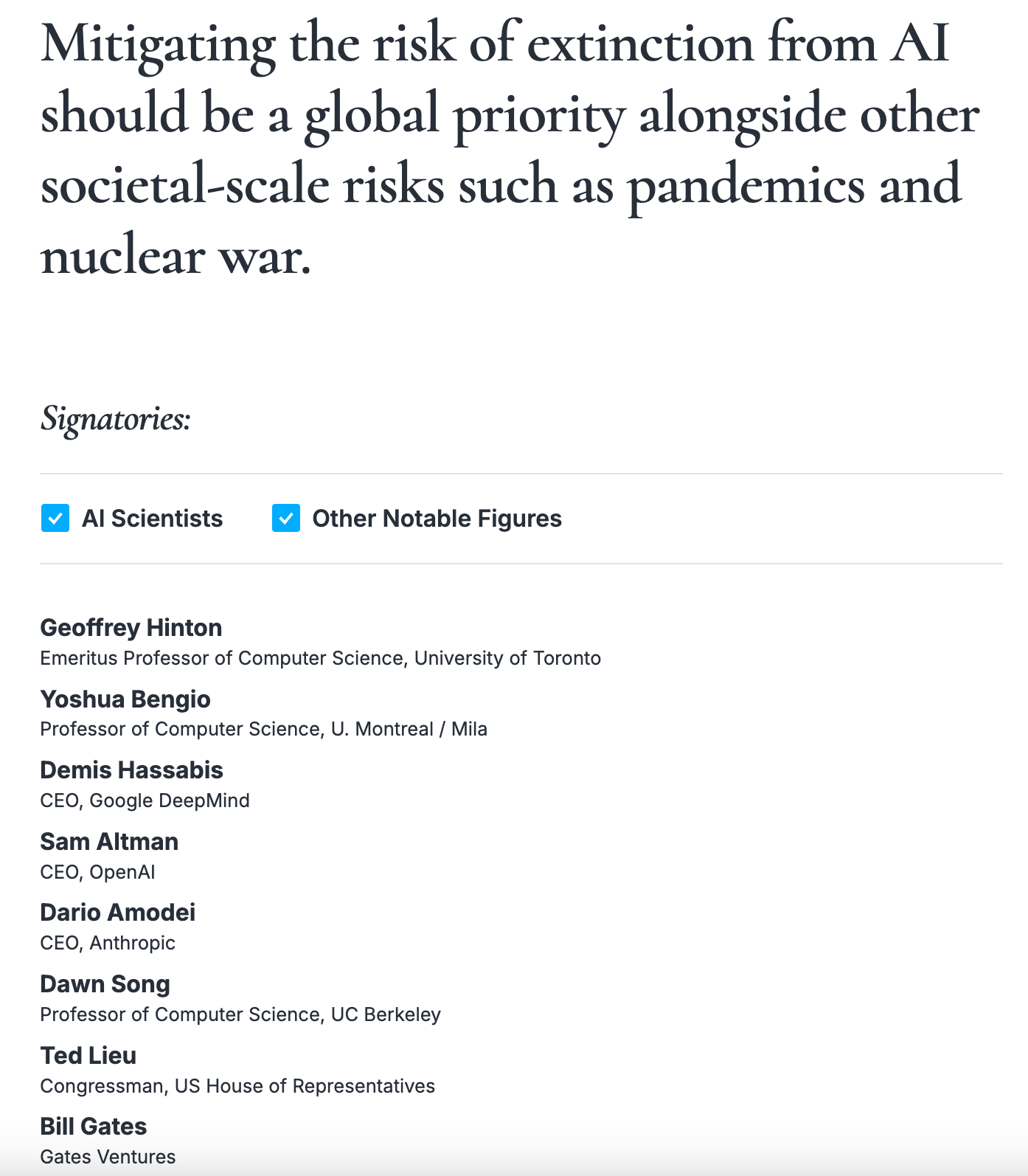

In May 2023, a number of world leaders on AI released a statement clarifying their view that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” Signatories include Dario Amodei (CEO, Anthropic), Demis Hassabis (CEO, Google DeepMind), and Sam Altman (CEO, OpenAI). For a primer on possible risks of powerful AI systems, I recommend this. For a fuller treatment, see this.

Though I don’t justify it here, I believe that holding an indefinite lead is not assured even if the US creates a model that can recursively self-improve (make itself stronger over time) - which carries a whole bunch of other risks.

OpenAI has alleged that DeepSeek’s R1 reasoning model was trained in part by drafting off OpenAI’s existing models.

It is true that a country that is rushing might forgo even well-established guardrails. It is also worth noting, however, that no AI developer believes they have yet found guardrails strong enough to ensure the safety of building an AGI system, even if not rushing.

Sometimes, a poker tournament ends with one player taking all of the other player’s chips. In this case, they’d get the respective 1st and 2nd place prizes, which could be very different. But commonly the two players will avoid this more extreme outcome and reach a compromise to “chop” the prize funds: The leading player is willing to give up a bit of upside to avoid the risk that the other player comes back from their deficit. The trailing player is willing to accept a buy-out, with a bit of upside relative to the likely outcome from losing naturally (having all of one’s chips taken).

Other states might have significant leverage in the AI competition as well; the dynamics are more complicated than just US vs. China, though I center that duo in the piece.

A treaty could involve mutually limiting our development of advanced AI, or could involve mutually committing not to cut certain corners on safety. For instance, the duration of a minimum testing period for frontier AI systems might be one possible safety standard.

Notice that this still does not ensure that an AI catastrophe is avoided. Perhaps the safety practices that can be verifiably adopted are still not sufficient practices for the US or China to safely manage the powerful AI systems they develop. But this is a significant start.

One catch: it might be possible to verify China having yielded only if you already have AGI yourself, but I am relatively skeptical of this.

This is a speculative empirical claim, and so it’s hard to know for sure, but consider the thought experiment: Imagine that the US is believed to be a year away from powerful AI, compared to a decade away. In which world is China more likely to try to cut the US off from Taiwan? I think it’s much more likely in the “AGI soon” world, where China is down to only a few tactics left to stop the US from getting there first.

Terrific angle. If China also looks at AI development through this lens, escalation in Taiwan seems imminent. The US has only indigenized a single-digit % of leading-edge semi capacity. Furthermore, China is likely to also scale up what is already arguably the most well resourced (cyber)espionage program in the world. Should the US somehow defend against exfil, algorithmic unlocks will still swiftly flow out of America and into leading Chinese projects.

Containing China's development of powerful AI systems will hinge first on deterring escalation in Taiwan and cracking down on economic espionage. America's lead in stack size, and with it its leverage, erodes in the face of each of these risks. These two areas will be vital proving grounds for American diplomacy as it hopefully builds up the muscles needed for AI non-proliferation.

I appreciate this analysis, but I'm worried about how it might be interpreted. You make a case for mutual deceleration and cooperation, but I think the framing could lead to misunderstandings.

The early focus on military threats and why "beating China" matters, combined with the "containment" language and poker analogies, could give casual readers the wrong takeaway. Someone skimming this whose main impression is "China getting AGI first would be catastrophic" could easily conclude "therefore we need to race to AGI as fast as possible and make sure China never gets close".

Your actual interesting policy recommendations come much later. But I worry that message gets lost in all the competitive framing upfront.

Maybe this is exactly how you intended to structure the argument, since you do seem a lot more open to racing in case cooperation fails than I am. But I can't help thinking that in our current environment, anything that starts with "here's why we can't let China win" risks dangerously reinforcing the racing mindset.

I tend to think slowing down makes sense regardless of what China does, so perhaps I'm just more sensitive to language that might be interpreted as pro-racing. But given how high the stakes are, I think the messaging on this stuff really matters.